While the industry is still digesting the official launch of the second-generation Snapdragon 8 mobile platform, the second day of Snapdragon Summit 2022 brought some surprises of its own. As in previous years, day two focused on new platforms for audio, PCs, game consoles, XR and other devices. Unusually, though, this year's new first-generation Snapdragon AR2 platform became the second product to generate real buzz after the second-generation Snapdragon 8 mobile platform. It is closer to the AR devices many of us have imagined, and it gives a clearer view of a future in which anyone can connect seamlessly to the “metaverse” at any time and in any place.

The first generation Snapdragon AR2 platform

The first-generation Snapdragon AR2 is Qualcomm Snapdragon's first platform designed specifically for building AR hardware. Before this, most AR devices used the Snapdragon XR series chips that they shared with VR devices. The Snapdragon XR1, XR2 5G and XR2+ Gen 1 are mature in practice and have become the preferred chips for many head-mounted devices. For AR devices, however, the XR chips cannot cover everything AR requires, and they also carry some surplus functions that could be trimmed. Because of these unoptimized parts, it is hard to shrink a platform built for XR devices. AR and XR devices are in fact very different at the application level, and in the future the two will complement rather than replace each other. XR devices need to provide an immersive, “sensory deprivation” experience in a fixed place and within a fixed range, while AR devices can serve as everyday devices in more varied, non-fixed scenes and richer usage environments. It is therefore necessary to separate the core platforms of AR and XR devices.

The first generation of Snapdragon AR2 distributed design

It is worth mentioning that the first-generation Snapdragon AR2 platform is built on a new 4 nm process, the same as the second-generation Snapdragon 8 mobile platform. This process delivers higher computing speed and better energy efficiency within a limited volume, and it helps reduce the weight and size of AR devices. To shrink AR devices further, the first-generation Snapdragon AR2 platform also differs from other Snapdragon platforms in one major way: it adopts a multi-chip distributed processing architecture. Its components are spread across different locations in the device rather than concentrated on a single integrated motherboard.

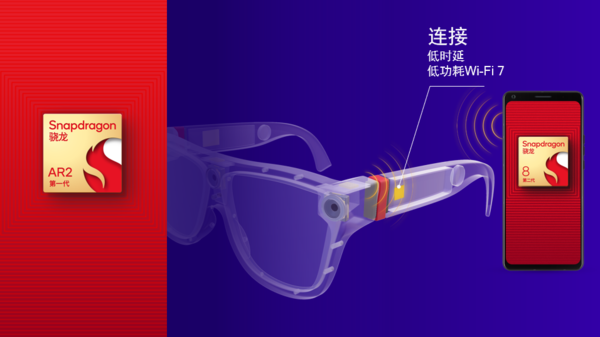

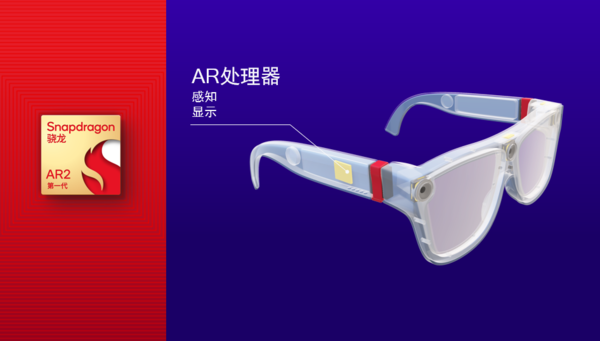

This design makes more efficient use of the space inside an AR device, leaving room for more hardware. The most direct result is that the PCB area of the Snapdragon AR2 main processor in AR glasses is 40% smaller than that of the Snapdragon XR2 platform, so it can be placed in one temple (arm) of the glasses. At the same time, the platform's overall AI performance is 2.5 times higher and its power consumption is 50% lower, which allows AR glasses to run at under 1 W. The distributed architecture also includes an AR coprocessor and a connectivity platform. The AR coprocessor mainly aggregates camera functions to collect and feed back data for AI and computer vision, and it can be placed in the middle of the frame. The connectivity platform provides a low-latency, low-power Wi-Fi 7 wireless connection and can be placed in the other temple.

The AR processor is optimized for low motion-to-photon (M2P) latency and supports up to nine concurrent cameras for understanding the user and the environment. Its enhanced perception capabilities include a dedicated hardware acceleration engine that improves user motion tracking and localization, an AI accelerator that reduces latency for high-precision input interactions such as hand tracking or six degrees of freedom (6DoF), and a reprojection engine for a smoother visual experience. The AR coprocessor aggregates camera and sensor data and supports eye tracking and iris authentication for foveated rendering, so that workloads are optimized only for the content the user is actually looking at. In this way, space is used more sensibly, power consumption is balanced against functionality and weight, and the groundwork is laid for AR devices that are free of annoying cables.

The first generation of Snapdragon AR2 platform distributed computing

Another widely praised design choice is that the Snapdragon AR2 platform also applies the distributed approach to computing itself. To deliver better effects and image quality, the platform processes latency-sensitive perception data directly on the glasses, while more complex processing is offloaded to a smartphone, PC or other compatible host device powered by a Snapdragon platform. This allocation is dynamic, so computing power is shifted in a way that achieves a better performance balance.

This is not easy to achieve, because it requires strong coordination between multiple devices, and that is one of the key jobs of the connectivity platform. The Qualcomm FastConnect 7800 connectivity system provides ultra-fast commercial Wi-Fi 7, bringing the latency between AR glasses and a smartphone or host device below 2 milliseconds. It also integrates support for FastConnect XR Software Suite 2.0, which gives better control over XR data to reduce latency, reduce jitter and avoid unnecessary interference.
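To make the glasses/host split more concrete, here is a minimal sketch of how such a placement decision could look. It is not Qualcomm's scheduler: the `Task` fields, the `place` function, the sample workloads and their timings are all hypothetical, and the only figure taken from the article is the sub-2 ms link latency, treated here as a one-way bound.

```python
from dataclasses import dataclass

# Upper bound quoted in the article for the glasses-to-host Wi-Fi 7 link.
# Treating it as a one-way figure is an assumption of this sketch.
LINK_LATENCY_MS = 2.0


@dataclass
class Task:
    name: str
    deadline_ms: float      # hypothetical latency budget for the task
    glasses_cost_ms: float  # hypothetical processing time on the glasses
    host_cost_ms: float     # hypothetical processing time on the host


def place(task: Task, link_latency_ms: float = LINK_LATENCY_MS) -> str:
    """Return "glasses" or "host" for a task (illustrative rule only)."""
    # Offloading pays the link latency twice: send data, receive result.
    host_total_ms = task.host_cost_ms + 2 * link_latency_ms
    fits_on_glasses = task.glasses_cost_ms <= task.deadline_ms
    fits_on_host = host_total_ms <= task.deadline_ms
    # Offload only if the host meets the deadline and is either the only
    # option that does, or faster end to end than running locally.
    if fits_on_host and (not fits_on_glasses or host_total_ms < task.glasses_cost_ms):
        return "host"
    return "glasses"


if __name__ == "__main__":
    tasks = [
        Task("head_tracking", deadline_ms=5.0, glasses_cost_ms=1.5, host_cost_ms=0.5),
        Task("scene_rendering", deadline_ms=30.0, glasses_cost_ms=80.0, host_cost_ms=10.0),
    ]
    for task in tasks:
        print(task.name, "->", place(task))
```

With these made-up numbers, the latency-sensitive tracking task stays on the glasses and the heavy rendering task goes to the host. Passing a larger `link_latency_ms` pushes the rendering task back onto the glasses, which is one way to picture why the low-latency link matters for this kind of dynamic split.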

Good hardware needs good development software so that developers can build systems and applications on the platform. Devices using the Snapdragon AR2 platform can use Snapdragon Spaces XR, an end-to-end developer platform that covers hardware, a full set of perception technologies and software tools. Developers can use it to create freely and to build every imagined place in the approaching “metaverse” world.

New second-generation Qualcomm S5 and S3 audio platforms

In addition to the Snapdragon AR2 platform, Snapdragon Summit 2022 also launched the new second-generation Qualcomm S5 and S3 audio platforms. It also demonstrated how Windows 11 PCs powered by the Snapdragon compute platform can improve productivity, collaboration and security anytime, anywhere, and noted that these PCs have been recognized by enterprise users.

What is clear is that, following the release of the second-generation Snapdragon 8 mobile platform, the mobile phone industry will see a new round of major changes next year. For other types of devices, Snapdragon has likewise been consistent in actively promoting hardware development across different fields. Qualcomm Snapdragon's platform solutions now span mobile phones, PCs, tablets, wearables, XR, smart cars and a variety of other industries, and the company is actively promoting collaboration and connection between these platforms. Each of these moves is forward-looking and confident. I believe that when the metaverse truly arrives, Qualcomm Snapdragon will continue to “add bricks and tiles” to its infrastructure.
